It’s time to talk. And quite frankly, I’m not thrilled.

If you don’t know who I am, hi. I’m Ritchie. I’m a 23-year-old programmer who happens to also be blind. So blind, in fact, that although I can still somewhat see where I’m going and what I’m doing, I’m writing this on a 55-inch TV and still have to zoom in. Sometimes the letters are at least a foot tall. I was born with Leber Congenital Amaurosis, diagnosed with it at age 10, and treated at 21. That being said, the damage is done, and I will be blind for life. Oh well, it is what it is.

What I Do

At time of writing, I’m working on contract as an accessibility engineer for KDE e.V. At this point, if you use the Plasma desktop, there’s likely some of my code running on your system now even if you don’t actually use the features I’ve worked on.

Also at the time of writing, I’m working on my own custom game engine called Ritchie’s Toolbox. It’s an open-source game engine, intended for my upcoming game Socially Distant.

I’ve been working on making it so your friendly neighbourhood screen reader can actually read Ritchie’s Toolbox to you. Because accessibility is important. Especially when you’re the one writing the engine, and you’re the one barely able to read it.

I will warn you, this article has nothing to do with KDE whatsoever, so if you came for that, sorry. I want to wear my game engine hat and share my experience dealing with screen readers without the convenience of KDE Frameworks, QML, or Qt. Nope, instead, we’re doing this from scratch. Because I feel it’s important you know how frustrating that is to do.

How do screen readers see your screen?

I’ll gloss over the platform-specific details for now. Frankly, because they confuse me.

Screen readers read your screen using what’s called an accessibility tree. Your desktop environment, and each application you open, will create its own accessibility tree. The accessibility tree represents the layout of the application’s user interface, where things are, what things are, and how things can be interacted with.

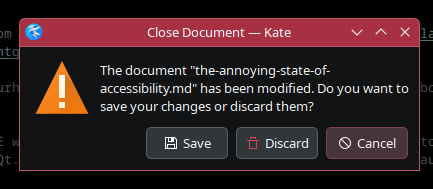

Consider this simple dialog box.

That dialog box would have its own accessibility tree, and it might look something like this:

0: Window: "Close Document - Kate"

1: Row Layout

2: Icon: "Warning"

3: Text: The document "the-annoying-state-of-accessibility.md" has been modified. Do you want to save your changes or discard them?

4: Dialog Actions

5: Button: Save

6: Button: Discard

7: Button, in focus: Cancel

The above example was abridged. In reality, accessibility trees can (and really should) contain A LOT more information than that. But I hope this at least helps you get the gist of what they are.

In accessibility trees, each UI element you see on-screen (and even ones you don’t see, like hidden screen reader labels on a website) has a corresponding node in the tree. Just like the UI element itself, each accessibility node can be assigned to a parent node, and can have any number of child nodes assigned to it. Generally, there’s also a root node, which represents the window itself.

If you look at the dialog box, you’ll notice the “Cancel” button is tinted slightly red. In my Plasma theme, this means the button is in focus. This is also reflected in the accessibility tree: the node for the button is marked as in focus. Only one node can be in focus at a time, just like you can only be typing in one text box at a time.
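To make that a bit more concrete, here’s a minimal sketch of the dialog’s tree in Python. It isn’t how any real toolkit or screen reader API does it: `AccessNode`, `set_focus`, and `describe` are made-up names for illustration, the message text is shortened, and the nesting (which nodes sit under the row layout versus the window) is my assumption, since the flat listing above doesn’t spell it out.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass(eq=False)
class AccessNode:
    """One node in a toy accessibility tree (hypothetical, not a real API)."""
    role: str
    label: str = ""
    focused: bool = False
    parent: Optional["AccessNode"] = field(default=None, repr=False)
    children: List["AccessNode"] = field(default_factory=list, repr=False)

    def add(self, child: "AccessNode") -> "AccessNode":
        """Attach a child node and return it so calls can be chained."""
        child.parent = self
        self.children.append(child)
        return child

    def walk(self) -> Iterator["AccessNode"]:
        """Yield this node, then every descendant, depth-first."""
        yield self
        for child in self.children:
            yield from child.walk()


def set_focus(root: AccessNode, target: AccessNode) -> None:
    """Mark exactly one node in the tree as focused."""
    for node in root.walk():
        node.focused = node is target


def describe(node: AccessNode) -> str:
    """Roughly what a screen reader might announce for a node."""
    text = f"{node.role}: {node.label}" if node.label else node.role
    return f"{text}, in focus" if node.focused else text


# Assumed nesting for the dialog shown above.
window = AccessNode("Window", "Close Document - Kate")
row = window.add(AccessNode("Row Layout"))
row.add(AccessNode("Icon", "Warning"))
row.add(AccessNode("Text", "The document has been modified."))
actions = window.add(AccessNode("Dialog Actions"))
save = actions.add(AccessNode("Button", "Save"))
discard = actions.add(AccessNode("Button", "Discard"))
cancel = actions.add(AccessNode("Button", "Cancel"))
set_focus(window, cancel)

for node in window.walk():
    print(describe(node))
```

Moving focus is just a matter of calling `set_focus` with a different node. A real tree would also carry things like bounding boxes, states, and the actions each node supports.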

Okay, that’s great. But how does a screen reader see the accessibility tree?

Now you know where the trouble begins. Defining an accessibility tree in your application is the (mostly) easy part. I say easy part because, if you’re using an existing competent UI toolkit like Qt or GTK, it builds the tree for you. I say mostly easy because you still have to actually label things, and if you’re writing your own UI toolkit like I am, you also have to build the tree.

Let’s pretend for a moment though, that you have a perfectly labelled accessibility tree all set up in your app. How does Orca, Narrator, NVDA, VoiceOver, or TalkBack actually see it?

AccessKit

AccessKit is a modern accessibility tree library built in Rust, with official bindings for C and Python.

If you’ve written an accessible UI toolkit before, or, well, you’re blind, you may have heard of AT-SPI (the Assistive Technology Service Provider Interface). On Linux, this is what screen readers like Orca use to communicate with other applications on your system to get their accessibility trees. AccessKit just wraps AT-SPI, as well as the other APIs for doing the same thing on other platforms.

I’m mentioning AccessKit because it’s what I chose to use in Toolbox. But Toolbox isn’t written in Rust, C, or Python. Toolbox is an engine written in C# on the .NET platform. Before anyone criticizes me for writing a performance-critical application in a garbage-collected and JITted language, not the time or the place. I know what I’m doing, I know the choices I’m making, and I also have a strong belief that accessibility is important no matter what language or framework your application is written in. C# is a language that exists, and programs written in it should be blind-accessible, including Toolbox.

AccessKit in C#

It doesn’t exist.

No, I’m serious. I looked.

There’s The Intercept, a game made in Unity. What I linked to is a fork of it that integrates AccessKit as a proof of concept. Unity uses C# for game code, so you would think there would be C# bindings in here.

There’s AccessKit.cs which I thought would be the bindings. They might be, but that’s a suspiciously small file. These bindings look way too opaque.

With enough understanding, this isn’t a problem. I could port this out of Unity. But the last commit on the fork was several years ago, and the README states that:

It currently runs only on Windows. Like the original version of The Intercept, this fork uses Unity 5.3.4f1.

Unity 5 is an ancient, end-of-life version of Unity. Even if I wanted to install it, the number one rule about Windows and Ritchies is that work is never done on Windows systems. I am not putting myself through the pain of using Windows just to test that this works at all, nor do I want to port it to Linux.

So I generated my own bindings. Say hello to AccessKitsharp.

I generated them based on the C bindings, and they’re as true to the header as possible.

Getting the accessibility tree into AccessKit

I’ll spare you the gory details of the code I actually had to write, for now. Technical writeup will come later, likely to Patreon first.

Suffice to say, there were many - AND I MEAN MANY:

- Segmentation faults deep in libc code

- Stack overflows in hashbrown

- Double-frees

- Weird .NET errors I’ve never seen before

- Lack of debuggability

- Confusion

And rather unfortunately, no documentation to help me out.

Rust memory safety means nothing to me

I don’t write my code in Rust. I don’t want to. People want me to, with memory safety being one of the main reasons why.

Here’s something to keep in mind: C# is also memory-safe. There may not be a borrow checker, but C# absolutely does do a good job of making sure you don’t double-free an object, index outside of an array, overflow a buffer, or shoot yourself in the foot with pointers. We have unsafe contexts too.

The reason Rust memory safety means nothing to me is that, just like in .NET, it breaks down the moment you need to interact with an unsafe C library or generally need to do something unsafe. Case in point, to the AccessKit devs, I absolutely did cause your code to double-free just by using the API the way I thought was correct. I did so because I was not aware of how your code manages memory, my code managed it differently, and you never told me you would free accessibility nodes after updating the tree. This meant I held onto those same pointers, reused them, and you crashed.

To the Rust fans out there, this is your reminder that you should never just rely on the language to save you from a double-free. Because the moment you let people interact with your library over C or a .NET P/Invoke call, your code is no longer memory-safe.
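For anyone writing bindings who wants to avoid the same trap, here’s a toy model of the problem in Python. To be clear, none of this is AccessKit’s real API: `ToyNativeLibrary`, `OwnedNode`, and `push_update` are invented names, and the “library” is just a set of integers pretending to be pointers. The only thing it borrows from the real story is the ownership rule: once a node goes into a tree update, the library frees it, so the caller must not touch that handle again.

```python
class ToyNativeLibrary:
    """Hypothetical stand-in for a native library that takes ownership of
    nodes, and frees them, when a tree update is pushed."""

    def __init__(self):
        self._live = set()   # handles that are currently allocated
        self._next = 1

    def node_new(self) -> int:
        handle = self._next
        self._next += 1
        self._live.add(handle)
        return handle

    def tree_update(self, handles):
        for handle in handles:
            if handle not in self._live:
                # In real native code this would be a crash, not an exception.
                raise RuntimeError(f"double free of handle {handle}")
            self._live.remove(handle)  # the library frees the node here


class OwnedNode:
    """Managed wrapper that remembers whether the handle was given away."""

    def __init__(self, lib: ToyNativeLibrary):
        self._handle = lib.node_new()

    def release(self) -> int:
        """Hand the raw handle over exactly once."""
        if self._handle is None:
            raise ValueError("node was already handed to the library")
        handle, self._handle = self._handle, None
        return handle


def push_update(lib: ToyNativeLibrary, nodes) -> None:
    """Transfer ownership of every node to the library in one update."""
    lib.tree_update([node.release() for node in nodes])


lib = ToyNativeLibrary()
button = OwnedNode(lib)
push_update(lib, [button])        # fine: the library now owns the node
try:
    push_update(lib, [button])    # reuse is caught before reaching native code
except ValueError as err:
    print("caught in managed code:", err)
```

The wrapper turns a crash deep in native code into an ordinary error at the call site. In C#, the same idea could probably be built on a `SafeHandle` subclass that is marked invalid once ownership is transferred, but treat that as a suggestion rather than a tested recipe.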

I’m Sad I Went Through This

I’m sad for many reasons.

I’m sad that C# doesn’t seem to be cared about when it comes to having access to accessibility toolkits, without needing to use an existing C# UI toolkit like Windows Forms, GTK#, or whatever else isn’t suitable for my needs.

I’m sad I can’t find documentation on how to even write an accessibility tree.

I’m sad that the C# bindings generator I used doesn’t document the bindings it generates, and that it even generated malformed C# syntax I had to fix.

I’m sad I can’t find API documentation for AccessKit itself, not its Rust API, not its C API.

I looked on the website.

There’s no wiki on the Rust or C repositories.

Why I’m Sad

I’m not sad because I feel entitled to these things. I’m sad because it’s part of a bigger problem.

You may have read this article (“I Want to Love Linux. It Doesn’t Love Me Back: Post 1 – Built for Control, But Not for People”). So did I.

Based on my experience with KDE and my experience actually delving into the weeds with AccessKit in a custom UI system, I truly feel that accessibility programming just isn’t accessible. Unless you happen to already understand the way each platform works, trying to find resources on how to actually let a screen reader know your UI exists is just painful. It’s going to involve reading code other people have already written. It’s going to involve hours, if not days, if not weeks of research and painful debugging. You likely won’t be able to ask many people for help, because they’ll know as much as you do.

You can’t out-Ritchie the Ritchie, and I absolutely was able to work everything out. AccessKit support is coming to Socially Distant, and that is absolutely fucking awesome given how text-heavy the game will be. People with more vision loss than me will be able to play the game and that’s important to me. That is awesome. But I have 13 years of programming experience, have a flexible job that gives me the time to actually investigate these things, and I regrettably have the patience and sheer stubbornness to actually pull it off as one person. Not everyone does, or should.

In order to improve accessibility on the Linux desktop, the absolutely most important thing is documentation. It isn’t enough for the toolkits like GTK and Qt to have the APIs you need to label widgets. It’s important to be able to understand how the underlying accessibility stack actually works. These APIs need to be available to use in every major language and framework in use, not just the fancy new ones like Rust or the ancient footguns like C. These things need to be easy to integrate into game engines, into application frameworks, into desktop environments, and the web.

If it means setting up some kind of central wiki where we can all, as a community, pool together the fragmented resources on how the hell accessibility works, then so be it - sysadmin is somehow easier for me.

Right now, learning accessibility programming feels barely accessible to me. If I’m struggling with it with my prior experience, then I have little hope of newbie programmers being able to figure it out, and that’s bad. Let’s fix this problem.

I wanted to add…

I’ve been sitting on this post for weeks wondering if I should actually put it out. The fact you’re reading this means I made that decision, finally. Over the weeks spent deliberating it, I did thankfully learn more about the screen reader stack on Linux. I still stand by the fact that it’s just not in a great place right now and needs to be better, but you should also know I still have a lot to learn about how screen readers actually work.

Furthermore, the reality is these tools and libraries aren’t maintained by large teams of people. If I recall correctly, AccessKit is maintained by just one or maybe a few people. This should absolutely be kept in mind, since in my own experience, maintaining (and documenting) such a complex piece of software with a very small team is just hard. To that end, while I am frustrated about documentation being hard to find or hard to access, some slack should be given. It isn’t for a lack of trying.

With that all said, I hope you can appreciate this post as a first-hand account of what it’s like to learn accessibility programming.